Around 87% of companies reportedly use AI in their recruitment process. But AI also brings new risks: bias, a lack of transparency about how decisions are made, data privacy pitfalls and even candidate fraud. That’s why it’s essential to understand where AI belongs in your hiring process and how it affects candidate trust before you chase the latest tech trend.

In this blog, we explore:

- What the ethics of AI for recruitment look like in practice

- How complete reliance on AI tools for recruitment changes the way candidates perceive your organisation

- How to build and communicate a company policy on AI in hiring

- Practical steps to restore integrity to your hiring process in the age of AI

- A free download of The Talent Labs’ ‘AI in Talent - Hackathon Insights’ report, based on the experiences of real Talent Acquisition pros.

At Eploy, we believe AI should amplify human capability in recruitment, not replace it. According to data from DemandSage, 87% of companies now use AI in their recruitment process, so the real question isn't whether to use AI, but how to use it responsibly while keeping humans firmly in control of every hiring decision.

What are the ethics of AI in recruitment?

Understanding the ethics of AI for recruitment is important because hiring decisions directly affect people’s lives and careers. When technology influences who gets an interview, a job, or even a rejection, employers carry a responsibility to make sure those systems are fair, transparent, and respectful of candidate rights and data.

Without an ethical framework, AI tools for recruitment risk reinforcing bias, damaging trust and exposing an organisation’s candidates to digital exclusion. By placing ethics at the centre, companies show candidates that integrity matters as much as efficiency. At a minimum, an ethical approach should cover:

- Fairness and non-discrimination: check for adverse impact and review how your use of AI affects accessibility.

- Transparency and explainability: candidates should know when AI is used and how it supports and influences decisions in the hiring process.

- Human oversight and meaningful review: avoid “solely automated” decisions for outcomes that significantly affect people. Under UK GDPR, people have rights related to automated decision-making, which the ICO guidance explains in more detail.

- Data minimisation and retention: collect only what’s necessary, define clear retention periods and keep data within controlled environments (not public AI services).

- Fraud prevention: as candidates’ use of advanced AI grows, so does the risk of fraud, and that risk must be mitigated. Clearly define your policy and make it available to candidates, so they understand how you use AI and where you stand on their own use of it during the recruitment process. Recruitment teams should also keep up with the latest developments in AI tools so they can stay vigilant.

Restoring integrity: AI & Recruitment - practical steps from Jamie Betts’ session at IHR Live

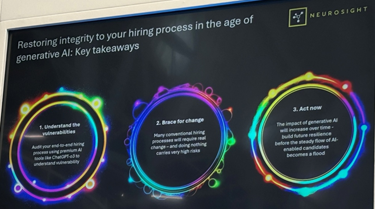

At IHR Live London 2025, Jamie Betts, founder of Neurosight, delivered an insightful session on how to build a truly ChatGPT-proof hiring process and restore trust and integrity from application to offer. Jamie shared these practical steps:

- Understand the vulnerabilities - audit your end-to-end hiring process using secure AI tools to identify where it is vulnerable.

- Brace for change - many conventional hiring processes will need real change, and doing nothing carries very high risks.

- Act now - the impact of generative AI will increase over time, so build future resilience before the steady flow of AI-enabled candidates becomes a flood.

How does full reliance on AI in recruitment affect candidate perception?

Research and industry reports highlight a trust gap. While many employers are adopting AI, candidates often worry that AI will judge them unfairly or hide decisions behind algorithms that don’t always make clear how those decisions are reached. A recent Gartner survey found that just 26% of job applicants trust AI to evaluate them fairly. That trust gap can increase drop-off rates, reduce offer acceptance and damage employer brand.

At the same time, candidates increasingly use AI themselves when applying for roles (e.g. CV and cover letter generation, interview preparation), which complicates the integrity of assessments and increases fraud risk. Employers should expect both reduced trust and new forms of candidate behaviour.

Talent Acquisition professionals need to be more vigilant than ever, especially during online video interviews. The growing use of “deepfake” AI tools makes it easy for another person to attend on the candidate’s behalf, and AI tools can also let candidates quietly access information, such as prompts, during assessments.

AI in recruitment, and the tools behind it, are continually evolving. The future of recruitment isn't about choosing between humans and AI; it's about using AI to give talented recruiters their time back. When AI handles data extraction, removes time-consuming administrative tasks and checks for bias, recruiters can focus on what they do best: understanding roles, assessing potential and building relationships. That's the approach we are taking at Eploy: practical AI that enhances human capability without removing human judgement.

If you want to explore the topic of AI in recruitment further, read our insight ‘Can AI Automation Tools Solve Candidate Sourcing Challenges’ here.

What does AI mean in practice? TA professionals share their thoughts on AI in Recruitment

Eploy recently joined The Talent Labs at their Manchester Collaborate Event, hosting AI Hackathon sessions with the attendees.

The event brought together TA professionals from different sectors to dig into what AI really means in practice, the opportunities it offers, the risks it raises and the steps needed to use it responsibly. Below, we share some of the key takeaways. To access the rest, you can download the full report for free here.

AI in Practice

Hackathon participants shared the range of tools already shaping their recruitment, shedding light on which AI tools are actually used in practice. Here are the tools used by TAs:

- Chatbots for candidate engagement and FAQs

- Interview transcription tools improving documentation and feedback

- Prompt-based co-pilots supporting advert writing and candidate outreach

- ATS/CRM automation streamlining scheduling and record-keeping

These AI tools have reduced admin but blurred the lines between automation and true AI.

Transparency & The Black Box Challenge

A key concern raised at the Hackathon was the opacity of AI tools, particularly large language models, where the decision-making process isn’t clear. Recruiters asked:

- Where does candidate data go?

- How are algorithms ranking applicants?

- What are our disclosure obligations?

Participants agreed that trust and transparency must underpin any responsible use of AI in talent acquisition.

The Quality Vs Quantity Dilemma

While AI tools increase candidate volume, many participants noted a drop in quality and engagement:

- High volumes of low-fit applicants

- Senior candidates disengaged from chatbots

- Recruiters spending more time filtering poor matches

Download the full ‘AI in Talent - Hackathon Insights’ report, and gain access to comprehensive AI insights, based on the experiences of real Talent Acquisition pros.

You may also be interested in these resources:

Since you read our 'Using AI tools in Recruitment' insight, you may also want to join Eploy's Time for Talent newsletter and explore our comprehensive catalogue of Webinars and Videos.
